NMIMS MPSTME Student Develops AI Model for Sign Language Translation, Empowering the Hearing and Speech-Impaired Community

Preeyaj Safary, a talented and compassionate 19-year-old BTech student at NMIMS Mukesh Patel School of Technology Management & Engineering (MPSTME), Mumbai, has created a groundbreaking artificial intelligence (AI) model that translates sign language into text, revolutionizing communication for individuals with hearing and speech impairments.

Preeyaj's technology uses AI to bridge the communication gap, making it easier for sign language users to interact with society at large.

Inspired by a visit to a restaurant where he witnessed the challenges faced by deaf and mute individuals in communicating with the outside world, Preeyaj embarked on a mission to develop a solution that would make their lives easier.

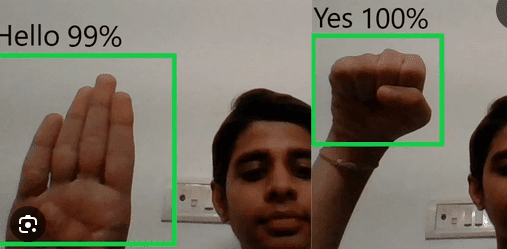

The AI model captures sign language gestures through a camera, and by comparing them to a comprehensive database of sign language images, it accurately translates the gestures into written text displayed on a connected screen.

The model not only provides the words corresponding to the signs but also reports an accuracy level for each translation, so users can judge how reliable the output is.
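The article does not describe the model's internals, so the following is only a minimal sketch of the general idea described above: a captured gesture is compared against a reference database, and the best match is returned together with a score. Everything here is an assumption made for illustration, including the tiny made-up feature vectors, the names `REFERENCE_SIGNS`, `translate_gesture`, and `add_sign`, and the use of cosine similarity as the score. It is not Preeyaj's implementation.

```python
import math

# Hypothetical reference database: sign label -> example feature vectors.
# A real system would derive features from camera images; these numbers
# are invented purely for illustration.
REFERENCE_SIGNS = {
    "hello": [[0.9, 0.1, 0.0], [0.8, 0.2, 0.1]],
    "thanks": [[0.1, 0.9, 0.2]],
    "yes": [[0.0, 0.2, 0.9]],
}


def cosine_similarity(a, b):
    """Return the cosine similarity of two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def translate_gesture(features, database=REFERENCE_SIGNS):
    """Return (label, score) for the closest reference example."""
    best_label, best_score = None, -1.0
    for label, examples in database.items():
        for example in examples:
            score = cosine_similarity(features, example)
            if score > best_score:
                best_label, best_score = label, score
    return best_label, best_score


def add_sign(label, features, database=REFERENCE_SIGNS):
    """Add one more example to the database, creating the label if needed."""
    database.setdefault(label, []).append(list(features))


if __name__ == "__main__":
    label, score = translate_gesture([0.85, 0.15, 0.05])
    print(f"{label} (score: {score:.2f})")
```

In this sketch, the score plays the role of the accuracy level shown next to each translated word, and `add_sign` stands in for the way the database can grow as more signs are collected.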

Preeyaj collected a diverse range of sign language images from various sources, including online platforms and interactions with individuals associated with NGOs working in this sector.

The AI model's database continues to expand as he adds more signs, and wider use of the technology is expected to further improve the database's accuracy and completeness.

In addition to his work on sign language translation, Preeyaj previously developed a computer program to help elderly individuals book vaccination slots during the COVID-19 pandemic. His commitment to using technology for the betterment of society is evident in his efforts to address critical societal needs through innovative solutions.